Summary

- Google is testing audio overviews in Google AI Search. These are AI-generated spoken summaries of search results that play through an integrated audio player directly on the results page, offering a more interactive and convenient experience.

- The feature, internally labeled “this is a test search”, reflects Google’s broader push toward accessibility and innovation in search. It lets users engage with search results in a voice-based, hands-free format.

- With the new audio search functionality, users can listen to key information through the audio player. The format also extends naturally to Google Home audio devices, which makes it easy to integrate into smart home ecosystems.

- The experience relies on machine learning to interpret audio sources and anticipate user intent. This makes it easier to search Google with spoken queries instead of typed text.

- Each test moves Google closer to more intuitive interactions. Audio overviews and on-demand summaries delivered through the audio player reduce friction in the search process.

- The evolution of Google’s audio technologies positions the company as a leader in conversational search. Audio tools are merging with AI-powered platforms to redefine how users connect with information.

- Overall, these audio search enhancements mark a significant step toward personalized, AI-led discovery. Features such as Google Home audio and the integrated audio player could become everyday tools for users worldwide.

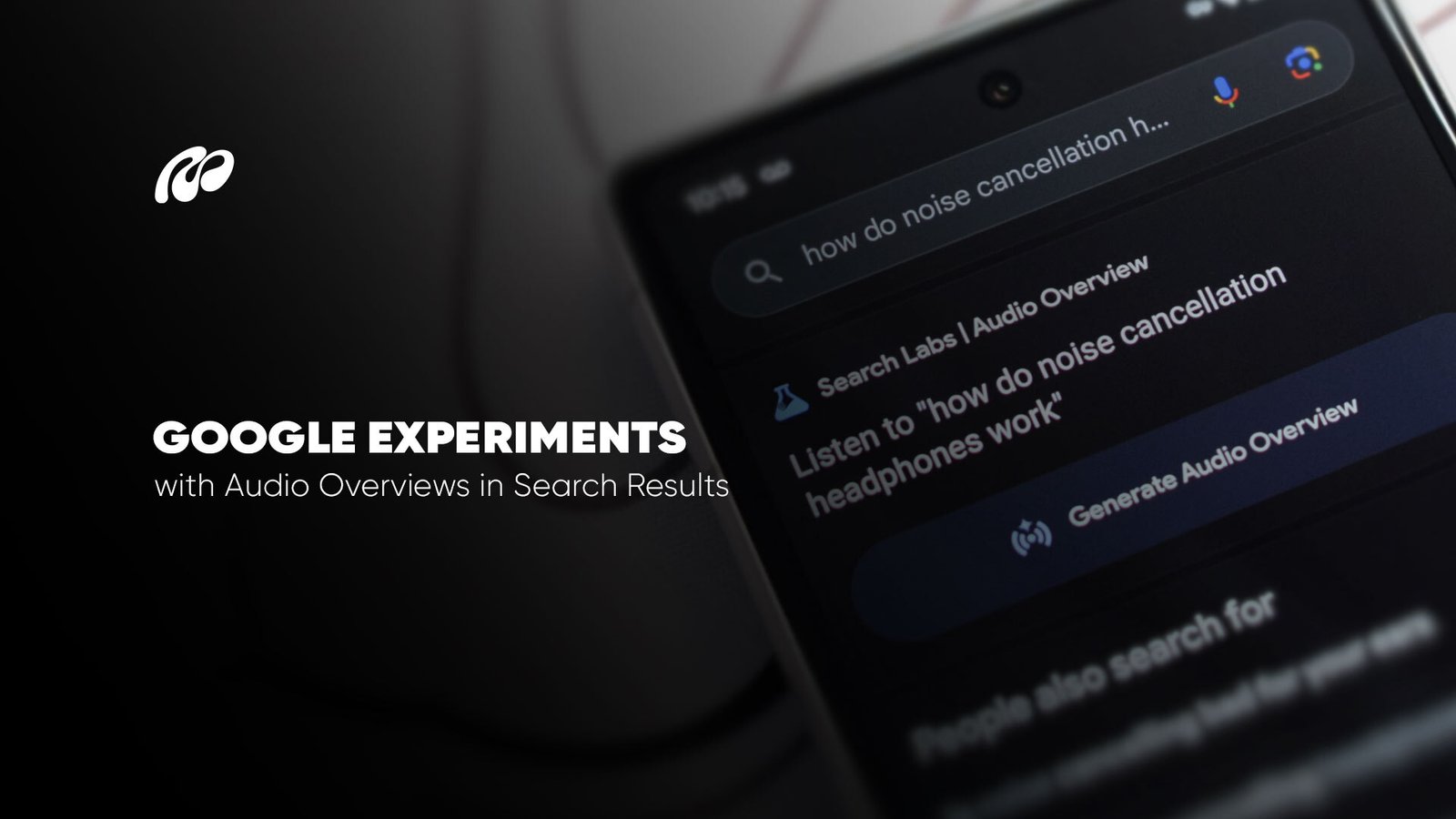

Google is currently experimenting with a new feature that could reshape how people engage with search results: audio overviews. Instead of relying solely on traditional text summaries, this update introduces an audio player within the search results page, enabling users to hear concise, AI-generated responses read aloud. The feature is still in early testing, internally labeled as “this is a test search”, but it points to a future where audio search becomes a core part of the search experience, especially on mobile and voice-enabled platforms.

By integrating voice content into search, Google AI Search expands its role beyond screen-based interaction. This move supports multitasking users, those with visual impairments, and a growing segment of people who prefer listening over reading. It also fits within Google’s broader vision of making search more conversational and context-aware, a vision strengthened by its Gemini AI model and deepening reliance on multimodal input.

Meanwhile, the broader AI landscape is evolving in parallel. OpenAI’s testing of ChatGPT connectors for Google Drive and Slack reflects a similar direction: embedding language models directly into everyday tools to simplify how users retrieve, manage, and interact with content. This convergence of AI-driven features across platforms suggests that the way people search Google, manage data, and consume content is becoming more fluid and adaptive. That shift is driven by voice and contextual understanding rather than typed input alone. Audio source recognition and speech-based summaries are likely just the beginning of a much larger change in how we access and experience information online.

Audio Overviews Are Coming to Search Results

Google is actively reshaping how users engage with its search engine by introducing audio overviews, AI-generated spoken summaries embedded directly in search results. This update allows users to listen to concise, voice-based responses through a built-in audio player, rather than reading traditional text summaries. The aim is to create a more natural and seamless experience for people who prefer to engage with content via audio search, especially when using mobile devices, Google Home audio systems, or when multitasking during daily routines.

The introduction of spoken responses is part of a much larger transformation in how Google is designing its AI capabilities around user behavior. A major signal of this shift was the company’s decision to replace Google Assistant with Gemini, a more advanced, multimodal AI system. Unlike its predecessor, Gemini handles a wider array of inputs, including text, voice, and visuals, while improving accuracy in interpreting various audio sources. This makes it a natural foundation for voice-first features like audio summaries in search, where accurate contextual understanding is crucial.

Google is also expanding its AI offerings to younger users. Through Gemini for Kids, the company has begun allowing children under 13 to interact safely with its AI tools in a controlled environment that offers educational and conversational engagement. The move shows how Google AI Search is being tailored to a broader audience. That audience includes not only adults in professional or casual settings but also families and students looking for adaptive, voice-based technology.

These shifts are part of a broader AI strategy detailed throughout the Mattrics news section, where updates reflect a growing emphasis on intuitive, voice-led interaction models. As Google audio tools continue to evolve, audio search is poised to become more than an optional feature; it’s becoming central to how users search Google, interact with results, and access knowledge in the AI-driven digital landscape.